Article by Sebastien Boissier

Deterministic sources by Quandela

This is part three of our series on single-photon sources. Building on our previous posts, we finally have the necessary context to discuss Quandela’s core technology and how it will accelerate the development of quantum-photonic technologies towards useful applications.

Atoms

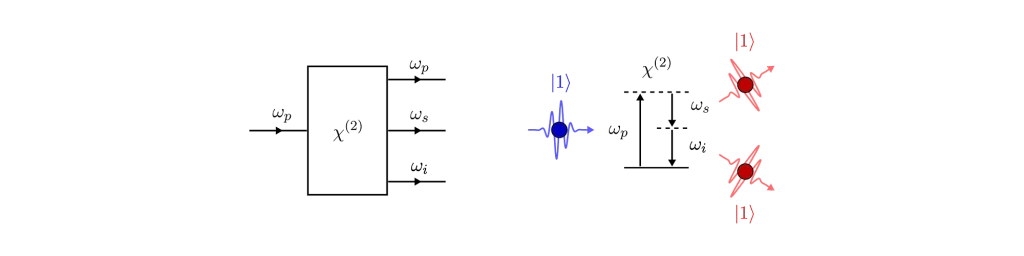

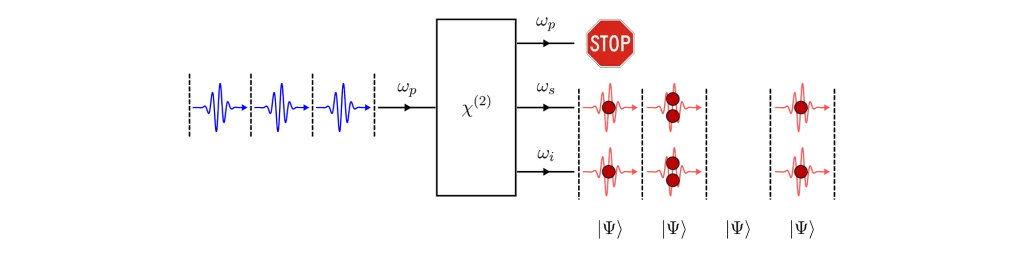

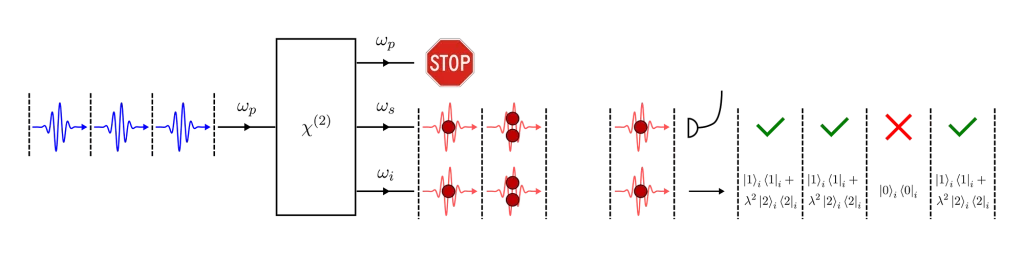

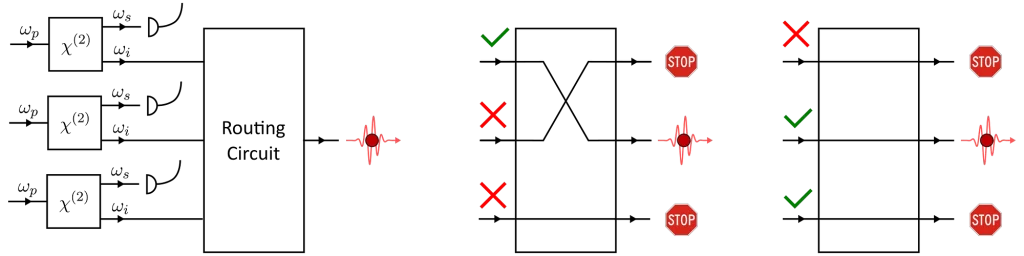

Even though heralded photon-pair sources are a well-known technology, they do seem to be a bit of a work-around to achieve our primary goal of generating pure single photons. The fact that they are inherently spontaneous is an issue, and we would prefer to avoid multiplexing, which is resource-hungry. OK then, is there another approach?

Atom-light interaction

An elegant idea comes from our knowledge of how light interacts with single quantum objects such as atoms. In this context, what we really mean by a “single quantum object” is the set of quantised energy levels of a bound charged particle. In a hydrogen atom, for example, the electron is trapped orbiting the much heavier proton, and it can only exist in one of a set of discrete quantum states (or in a superposition of them).

When we shine a laser on an atom, we can drive transitions between two states. For example, the electron can be promoted from its ground state to a higher-energy excited state. In general, there are certain constraints that the pair of states must satisfy for transitions to occur under illumination. These are broadly called selection rules and correspond to various conservation laws that the interaction must obey.

In our case, we are interested in dipole-allowed transitions which result in strong atom-light interaction. To drive an electric dipole transition, the laser must be resonant (or near-resonant) with the energy difference separating the two states. This means that we have to tune the angular frequency of the laser ωₗₐₛₑᵣ such that the photon energy ħωₗₐₛₑᵣ is equal (or near-equal) to the difference in energy.
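As a quick numerical check of the resonance condition, the sketch below converts an energy gap ΔE into the angular frequency ω = ΔE/ħ and the vacuum wavelength λ = 2πc/ω that a resonant laser would need. The 10.2 eV gap is the hydrogen 1s → 2p transition, used purely as an illustration; the function name `resonant_laser` is hypothetical.

```python
import math

HBAR = 1.054571817e-34      # reduced Planck constant (J s)
C = 299_792_458.0           # speed of light in vacuum (m/s)
EV = 1.602176634e-19        # one electronvolt in joules

def resonant_laser(delta_e_ev):
    """Return (angular frequency in rad/s, vacuum wavelength in nm) such that
    the photon energy hbar*omega equals the transition energy delta_e_ev."""
    omega = delta_e_ev * EV / HBAR
    wavelength_nm = 2 * math.pi * C / omega * 1e9
    return omega, wavelength_nm

omega, lam = resonant_laser(10.2)   # hydrogen 1s -> 2p, about 10.2 eV (illustrative)
print(f"omega = {omega:.3e} rad/s, lambda = {lam:.1f} nm")
```

The resulting wavelength lies in the vacuum ultraviolet, about 121.6 nm, which is why hydrogen itself is not a convenient emitter for fibre-based photonics.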

When this is done, the electron starts to oscillate between the ground and excited levels, passing through quantum superpositions of the two states in between. This phenomenon is called Rabi flopping. If we control the exact duration of the interaction (i.e. the amount of time the laser is on), we can deterministically move the electron from the ground to the excited state, and vice versa.
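For an ideal two-level system driven exactly on resonance, and assuming no spontaneous decay during the pulse, the excited-state probability follows P_e(t) = sin²(Ωt/2), where Ω is the Rabi frequency set by the laser field strength and the transition dipole. A pulse of duration π/Ω (a "π-pulse") therefore transfers the electron fully to the excited state. The Rabi frequency below is a hypothetical value chosen for illustration.

```python
import math

def excited_population(rabi_freq, t):
    """Excited-state probability of a resonantly driven, non-decaying
    two-level system that starts in the ground state."""
    return math.sin(rabi_freq * t / 2) ** 2

omega_r = 2 * math.pi * 1e11          # hypothetical Rabi frequency (rad/s)
t_pi = math.pi / omega_r             # pi-pulse duration: full inversion

for label, t in [("pi/2-pulse", t_pi / 2), ("pi-pulse", t_pi), ("2pi-pulse", 2 * t_pi)]:
    print(f"{label}: t = {t * 1e12:.2f} ps, P_e = {excited_population(omega_r, t):.3f}")
```

A π/2-pulse leaves an equal superposition, a π-pulse fully excites the electron, and a 2π-pulse returns it to the ground state.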

Spontaneous emission

As with spontaneous parametric down-conversion (see part 2), the vacuum fields also play an important role here. When the electron is in the excited state, it doesn’t stay there forever. The presence of vacuum fluctuations causes the electron to decay back to the ground state in a process called spontaneous emission (in fact it’s a little more complicated than that, but this is a fine description for our purposes). Spontaneous decay of the excited state is not instantaneous; it typically follows an exponential decay with lifetime T, or decay rate Γ = 1/T.
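With an exponential decay of lifetime T, the probability that the photon has been emitted by time t is 1 − exp(−t/T) = 1 − exp(−Γt). The sketch evaluates this for a hypothetical 1 ns lifetime, a value chosen for illustration only.

```python
import math

def emitted_probability(t, lifetime):
    """Probability that spontaneous emission has occurred by time t,
    for an excited state with exponential decay of the given lifetime."""
    gamma = 1.0 / lifetime          # decay rate Gamma = 1/T
    return 1.0 - math.exp(-gamma * t)

T = 1e-9   # hypothetical lifetime: 1 ns
for n_lifetimes in (1, 3, 5):
    p = emitted_probability(n_lifetimes * T, T)
    print(f"after {n_lifetimes} T: {p:.3f}")
```

After one lifetime only about 63% of the photons have been emitted; it takes several lifetimes for emission to be essentially complete.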

Additionally, there’s another property of dipole-allowed transitions which is of great interest to us. As one might have guessed from the resonant nature of the interaction (i.e. energy conservation), the full transition of the electron from the ground state to the excited state (or vice-versa) is accompanied by the absorption (or emission) of a single photon from the laser. And more importantly, spontaneous decay from the excited state to the ground state always comes with the emission of a single photon.

So here we have a recipe to create a single photon. We first use a laser pulse to excite the electron to the excited state, and then we wait for the electron to decay back to the ground state by emitting a single photon.

Note, however, that the laser pulse has to be short compared to the lifetime of the excited state. Re-excitation of the emitter can happen as soon as there is some probability that the electron is back in the ground state. Therefore, if the laser pulse is still on when spontaneous emission has already started, there is a chance that the emitter will emit, get re-excited and emit again. This has the undesirable effect of producing two photons within a single laser pulse.
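A rough way to see why the pulse must be short: each emission that happens while the pulse is still on opens the door to re-excitation and a second photon. As a crude proxy, assuming the emitter is already excited at the very start of a pulse of duration τ (which overestimates the real risk), the chance of an emission during the pulse is 1 − exp(−τ/T). This is not a full model of re-excitation, which would require solving the driven, decaying two-level dynamics; the lifetime and pulse durations are hypothetical.

```python
import math

def early_decay_probability(pulse_duration, lifetime):
    """Crude proxy: probability that an emitter excited at the start of the
    pulse has already decayed before the pulse ends."""
    return 1.0 - math.exp(-pulse_duration / lifetime)

T = 1e-9                                  # hypothetical 1 ns lifetime
for tau in (5e-12, 50e-12, 500e-12):      # hypothetical pulse durations
    print(f"tau = {tau * 1e12:5.0f} ps -> {early_decay_probability(tau, T):.4f}")
```

For τ ≪ T the proxy scales as τ/T, so picosecond pulses on a nanosecond emitter keep it at the sub-percent level.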

We can now see the major difference between the scheme based on spontaneous parametric down-conversion and the one based on spontaneous emission. With the latter, we can use short laser pulses to actively suppress the probability of multi-photon emission (and minimise the g⁽²⁾) without compromising on brightness.

The brightness is unaffected because the electron will always emit a single photon if it is completely promoted to its excited state. This is why sources based on such emitters are usually referred to as “deterministic” sources of single photons.

Collecting photons

Dipole radiation

So, can it be that simple? Well, there is one thing we haven’t considered yet: where the photons get emitted. And unfortunately for us, single photons tend to leave the atom in every direction.

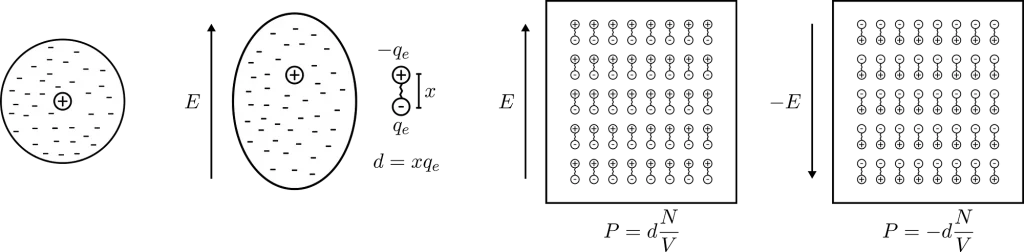

To be more precise, spontaneous emission (from a linearly-polarised dipole-allowed transition) follows the radiation pattern of an oscillating electric dipole, similar to that of a basic antenna. Recall that a radiating dipole is two opposite charges oscillating about each other on a fixed axis.

The fact that dipoles come up again here is not a coincidence. The rules of dipole-allowed transitions and dipole radiation stem from the same approximation that the wavelength of the emitted light is much greater than the physical size of the atom.

On the left, we show the electric field generated by an oscillating dipole oriented in the vertical direction. We see that, apart from along the dipole axis itself (where no light is emitted), the field propagates rather isotropically, and there is no preferred direction in the horizontal plane. This is essentially because the system has rotational symmetry about the dipole axis.

If we look far away from the dipole, we can calculate the optical intensity that is emitted in space for every direction. This graph gives us the probability per steradian that a single photon is emitted at every angle. The redder the colour, the more likely a photon will emerge in that direction.
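For a dipole oriented along z, the far-field emission probability per steradian is p(θ) = 3 sin²θ / (8π), where θ is the angle from the dipole axis. The short sketch checks numerically that this integrates to one over the full sphere and that it vanishes along the dipole axis. It uses a simple midpoint rule; the grid size is an arbitrary choice.

```python
import math

def dipole_pattern(theta):
    """Probability per steradian of emitting at polar angle theta
    (measured from the dipole axis) for a linear electric dipole."""
    return 3.0 * math.sin(theta) ** 2 / (8.0 * math.pi)

def total_probability(n=2000):
    """Integrate p(theta) over the sphere: the integrand has no phi
    dependence, so the azimuthal integral contributes 2*pi."""
    d_theta = math.pi / n
    total = 0.0
    for i in range(n):
        theta = (i + 0.5) * d_theta          # midpoint rule
        total += dipole_pattern(theta) * math.sin(theta) * d_theta
    return 2.0 * math.pi * total

print(f"integral over sphere: {total_probability():.6f}")
print(f"along the axis: {dipole_pattern(0.0):.3f}, perpendicular: {dipole_pattern(math.pi / 2):.4f}")
```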

It should be rather obvious why the above figure is bad for us. To collect all the photons into a light-guiding structure like an optical fibre, we would require an impossible system of lenses and mirrors to redirect all the emission back into the fibre.
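To put a number on this, assume a single lens in air whose optical axis is perpendicular to the dipole and which accepts a cone of half-angle θ_max, so that its numerical aperture is NA = sin θ_max. Integrating the dipole pattern over that cone gives the closed form f = 1/2 − 3c/8 − c³/8 with c = cos θ_max. The sketch evaluates it for illustrative NA values; the function name is hypothetical, and losses in the lens and in coupling to a fibre mode are ignored.

```python
import math

def collected_fraction(na):
    """Fraction of a dipole's emission collected by a lens in air whose
    axis is perpendicular to the dipole, for numerical aperture na."""
    c = math.cos(math.asin(na))        # cos(theta_max), with NA = sin(theta_max)
    return 0.5 - 3.0 * c / 8.0 - c ** 3 / 8.0

for na in (0.5, 0.7, 0.9):
    print(f"NA = {na}: {100 * collected_fraction(na):.1f}% collected")
```

Even a high-NA objective (NA = 0.9) captures only about a third of the photons, and no single lens can exceed 50%, since that would require collecting a full hemisphere.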

And if we lose a large portion of the emitted photons, our deterministic source becomes a low-brightness one, limiting the scalability of our quantum protocols.

The Purcell effect

To help us collect more photons from atoms, we can rely on an amazing fact of optics: the lifetime and radiation pattern of an emitter depend on its surrounding dielectric environment, a phenomenon called the Purcell effect. In fact, the radiation pattern we have shown above is only true for an atom placed in a homogeneous medium.

You might think: “Well, that sounds trivial. If I put an optical element like a lens in front of the atom, I am guaranteed to change its emission pattern in some way”. And indeed you would be right, but here we are looking at something a little more subtle.

We mentioned above that spontaneous emission is caused by vacuum field fluctuations. It turns out we can engineer the strength of these fluctuations at the position of the atom to accelerate or slow down spontaneous emission in certain directions. To do this, we have to build structures around the atom which are on the scale of the emitted light’s wavelength (λ).

Let’s look at an example: an emitter placed between two blocks of high refractive-index material (such as gallium arsenide, GaAs).

The important parameter here is the Purcell factor: the ratio of the emitter’s lifetime in the bulk material (here, just air) to its lifetime in the structure or, equivalently, the ratio of its spontaneous decay rate in the structure to its decay rate in bulk. As the distance d between the blocks decreases, the lifetime of the emitter varies widely, as quantified by the Purcell factor. What’s going on?

Optical modes

The magnitude of the vacuum field fluctuations depends on a couple of things. First, it depends on the number of solutions there are to Maxwell’s equations at the frequency of the emitter (the so-called density of optical modes). With an increasing number of available modes, there are more paths by which light can get emitted by the atom, and spontaneous emission is sped up.

Second, we have to consider how localised the electric-field fluctuations of the modes are. An optical mode that is spread out in space does not induce strong vacuum fluctuations at any particular position, whereas a localised mode concentrates its vacuum field in the region where it is confined.

If one particular mode has larger local fluctuations than others, the atom preferentially decays by emitting light into this mode (larger fluctuations translate into higher decay rates). The radiation profile is therefore changed, because the emission will mostly resemble the profile of the dominant mode.
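The competition between decay channels can be made quantitative. If the emitter decays into the dominant mode at rate Γ_cav and into all other modes at rate Γ_other, the probability that a given photon goes into that mode is β = Γ_cav / (Γ_cav + Γ_other). Assuming, as a simplification, that Γ_other stays close to the bulk rate Γ₀, so that Γ_cav ≈ F_P Γ₀ with F_P the Purcell factor for that mode, this becomes β ≈ F_P / (F_P + 1). The Purcell factors below are illustrative values.

```python
def beta_factor(gamma_cav, gamma_other):
    """Probability that a spontaneously emitted photon goes into the
    cavity mode, given the decay rates into the mode and elsewhere."""
    return gamma_cav / (gamma_cav + gamma_other)

def beta_from_purcell(purcell):
    """Same quantity under the simplifying assumption that emission into
    all other modes proceeds at the bulk rate (Gamma_other = Gamma_0)."""
    return beta_factor(purcell, 1.0)   # rates expressed in units of Gamma_0

for fp in (1, 5, 10, 20):
    print(f"F_P = {fp:2d}: beta = {beta_from_purcell(fp):.3f}")
```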

This is what we are seeing in the above experiment. As the separation between the two blocks decreases, cavity modes come in and out of resonance with the emitter. Cavities are dielectric structures which trap light using an arrangement of mirrors. Here, the cavity is formed by reflections off the high-index material, which make the light bounce back and forth in the air gap.

Cavity modes only appear at the frequency of the emitter when we can approximately fit an integer number of half-wavelengths between the blocks, creating a standing-wave pattern in their electric-field profile.

In our example, however, we only see the modes with an integer number of full wavelengths. This is because the other modes do not induce any electric-field fluctuations exactly at the midpoint between the blocks, where we placed our emitter: there is a node in their standing-wave pattern. Therefore, no spontaneous emission from the atom goes into these modes, and they don’t affect its lifetime.

In contrast, modes with an integer number of full-wavelengths between the mirrors have the maximum of their fluctuations at the centre (anti-node of the standing wave). Therefore, they strongly affect the lifetime of the emitter and its radiation pattern.

Trapping light

By trapping light with mirrors, we have seen how a cavity mode can induce strong vacuum fluctuations at the position of an atom, and therefore funnel its spontaneous emission in a desired direction. It is important to note that the confinement of cavity modes not only depends on how close the mirrors are to each other, but also on how long the light stays inside the cavity. A “leaky” cavity that does not trap light for a long time also does not induce strong vacuum fluctuations.

The cavity we have studied above can only trap light in the vertical direction. Its modes have an infinite extent in the horizontal plane because of the continuous translational symmetry. Moreover, even vertically, it traps light rather poorly. The reflectivity between air and GaAs is only about 30%, meaning that on each bounce 70% of the light is lost upwards or downwards.
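The 30% figure follows from the normal-incidence Fresnel reflectance R = ((n₁ − n₂)/(n₁ + n₂))². The sketch assumes n ≈ 3.5 for GaAs near the emission wavelength (an approximate value) and also shows how quickly the light in the gap is lost after a few bounces.

```python
def fresnel_reflectance(n1, n2):
    """Normal-incidence intensity reflectance at an n1/n2 interface."""
    return ((n1 - n2) / (n1 + n2)) ** 2

R = fresnel_reflectance(1.0, 3.5)          # air / GaAs, n_GaAs ~ 3.5 assumed
print(f"R = {R:.3f}, transmitted per bounce = {1 - R:.3f}")

# Fraction of the light still in the gap after k reflections
for k in (1, 2, 5):
    print(f"after {k} bounce(s): {R ** k:.4f}")
```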

So first, we could use better mirrors. A great way to build a mirror is to alternate thin layers of high and low refractive-index materials. These so-called Bragg mirrors can be engineered to have extremely high reflectivity at chosen wavelengths. This is achieved by making each layer a quarter-wavelength thick (for light inside that material), so that the reflections from all the interfaces interfere constructively.
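The quarter-wave condition can be checked with a standard transfer-matrix calculation at normal incidence (our choice of method here, not a description of how Quandela designs its mirrors). Each layer gets a thickness λ/(4n), and the reflectance from air is computed for a growing number of layer pairs. The GaAs/AlAs pair with n ≈ 3.52 and n ≈ 2.95, the GaAs substrate and the 930 nm design wavelength are assumed, approximate values; absorption and dispersion are ignored.

```python
import cmath
import math

def layer_matrix(n, thickness_nm, wavelength_nm):
    """Characteristic (transfer) matrix of a lossless layer at normal incidence."""
    delta = 2 * math.pi * n * thickness_nm / wavelength_nm   # phase thickness
    return [[cmath.cos(delta), 1j * cmath.sin(delta) / n],
            [1j * n * cmath.sin(delta), cmath.cos(delta)]]

def matmul(a, b):
    """Product of two 2x2 matrices stored as nested lists."""
    return [[a[0][0] * b[0][0] + a[0][1] * b[1][0], a[0][0] * b[0][1] + a[0][1] * b[1][1]],
            [a[1][0] * b[0][0] + a[1][1] * b[1][0], a[1][0] * b[0][1] + a[1][1] * b[1][1]]]

def bragg_reflectance(n_high, n_low, pairs, wavelength_nm,
                      design_nm=None, n_in=1.0, n_sub=3.52):
    """Reflectance of a (high/low)^pairs quarter-wave stack on a substrate,
    illuminated from a medium of index n_in."""
    if design_nm is None:
        design_nm = wavelength_nm
    m = [[1, 0], [0, 1]]
    for _ in range(pairs):
        for n in (n_high, n_low):
            # quarter-wave layer: optical thickness n*d = design_nm / 4
            m = matmul(m, layer_matrix(n, design_nm / (4 * n), wavelength_nm))
    b = m[0][0] + m[0][1] * n_sub
    c = m[1][0] + m[1][1] * n_sub
    r = (n_in * b - c) / (n_in * b + c)
    return abs(r) ** 2

for pairs in (0, 5, 10, 20, 25):
    print(f"{pairs:2d} pairs: R = {bragg_reflectance(3.52, 2.95, pairs, 930.0):.5f}")
```

With zero pairs the result reduces to the bare air/GaAs Fresnel reflectance; with a few tens of pairs it climbs above 99.9%. Evaluating at a wavelength away from `design_nm` shows that this high reflectivity only holds within a finite stop band.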

Next, we have to confine the mode in 3D. One way to do that is to curve one (or both) of the Bragg mirrors to compensate for the diffraction of light inside the cavity. Our preferred method is to structure the stack of layers into micropillars. The high refractive-index contrast between the pillar and the surrounding air confines the light inside the pillar through index guiding (similar to the mechanism by which light is guided in an optical fibre).
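In a simple ray picture, index guiding relies on total internal reflection: rays hitting the GaAs/air sidewall at more than the critical angle θ_c = arcsin(n_air/n_GaAs) from the sidewall normal are reflected back into the pillar. The sketch assumes n_GaAs ≈ 3.5. This ray picture is only a rough guide for micron-scale pillars, where a full waveguide-mode treatment applies.

```python
import math

def critical_angle_deg(n_core, n_clad=1.0):
    """Angle from the sidewall normal beyond which total internal reflection occurs."""
    return math.degrees(math.asin(n_clad / n_core))

theta_c = critical_angle_deg(3.5)      # GaAs pillar in air, n ~ 3.5 assumed
# A ray making angle alpha with the pillar axis hits the sidewall at 90 - alpha
max_axis_angle = 90.0 - theta_c
print(f"critical angle: {theta_c:.1f} deg; guided if within {max_axis_angle:.1f} deg of the axis")
```

Because the index contrast is so large, the critical angle is small (about 16.6°), so rays travelling along the pillar over a wide range of angles stay trapped.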

By making these structures, we get cavity modes that are highly localised and that trap light for a long time. If an atom is placed at an anti-node of the cavity’s electric field, the probability of the emission going into the cavity mode will be much greater than that of the atom emitting in a random direction.
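Both ingredients, localisation and long trapping time, appear in the standard Purcell formula for an ideal emitter (spectrally matched to a mode that is narrower than the emitter's linewidth allows, located at the field maximum and with its dipole aligned to the field): F_P = (3/(4π²)) (λ/n)³ (Q/V). Here Q is the quality factor, related to the photon storage time by τ_cav = Q/ω, and V is the mode volume. The values Q = 2000, V = 10 (λ/n)³ and λ = 930 nm are illustrative assumptions, not Quandela device parameters.

```python
import math

C = 299_792_458.0   # speed of light (m/s)

def purcell_factor(q, v_norm):
    """Ideal Purcell factor; v_norm is the mode volume in units of (lambda/n)^3,
    so the (lambda/n)^3 prefactor cancels."""
    return 3.0 / (4.0 * math.pi ** 2) * q / v_norm

def photon_lifetime(q, wavelength_m):
    """Photon storage time in the cavity, tau = Q / omega."""
    omega = 2 * math.pi * C / wavelength_m
    return q / omega

Q, V = 2000, 10.0            # illustrative quality factor and normalised mode volume
fp = purcell_factor(Q, V)
tau = photon_lifetime(Q, 930e-9)
print(f"F_P = {fp:.1f}, photon lifetime = {tau * 1e12:.2f} ps")
```

Doubling Q (a longer-lived mode) or halving V (a more localised mode) each doubles the ideal Purcell factor.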

The final piece of the puzzle is what happens to the single photon once it has been emitted by the atom and is trapped inside the cavity. The Bragg mirrors are designed to keep the light in for a long time, so the photons will mostly leave the cavity from the sides in random directions. This defeats the point of having a cavity in the first place!

To design a good single-photon source, we have to make one mirror less reflective than the other (with fewer Bragg layers, for example) so that the photons preferentially leave the cavity through that mirror only. This is how we achieve near-perfect directionality of the source. Finally, by placing a fibre close to the output mirror, we can collect the emitted single photons with high probability.

Note, however, that this is a compromise. By reducing the reflectivity of one mirror, the cavity mode becomes shorter-lived, which reduces the vacuum-field fluctuations and therefore decreases the probability that the atom emits into the cavity mode in the first place. The structure must be carefully engineered to strike the right balance.
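The compromise can be illustrated with a deliberately simple toy model (our assumption, not Quandela's design procedure). Let κ_out be the loss rate through the output mirror and κ_loss all other cavity losses (side leakage, the other mirror, absorption), with rates in units of κ_loss. Since the Purcell factor scales with Q, we take F_P = A/(κ_out + κ_loss) for some constant A; the photon enters the mode with probability β = F_P/(F_P + 1) and then leaves through the output mirror with probability κ_out/(κ_out + κ_loss). The product is the fraction of photons exiting where we want them. The value A = 20 is arbitrary.

```python
def output_efficiency(k_out, k_loss=1.0, a=20.0):
    """Toy model: fraction of emitted photons that leave through the output
    mirror, for output-coupling rate k_out and other cavity losses k_loss."""
    purcell = a / (k_out + k_loss)            # F_P proportional to Q ~ 1/total loss
    beta = purcell / (purcell + 1.0)          # probability of emitting into the mode
    eta_out = k_out / (k_out + k_loss)        # probability of leaving via the output mirror
    return beta * eta_out

# Sweep the output coupling and find the best compromise
grid = [0.01 * i for i in range(1, 5001)]     # k_out from 0.01 to 50
best = max(grid, key=output_efficiency)
print(f"optimal k_out = {best:.2f}, efficiency = {output_efficiency(best):.3f}")
```

In this toy model the optimum sits at κ_out = √(A + 1) κ_loss: a weaker output mirror wastes photons through the other loss channels, while a stronger one spoils the Purcell enhancement.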

Quantum dots

It is a hard technological challenge to build the microstructures for the efficient collection of photons. An even harder task is to place an atom at the exact spot where the vacuum-field fluctuations of the cavity mode are at their maximum. While this can be done with single atoms in a vacuum, it involves a very complex procedure and bulky ancillary equipment.

Trapping electrons

Another way to proceed is to find quantum emitters directly in the solid state. In the last few decades, a number of ways have been found to isolate the quantum energy levels of single electrons inside materials. The approach that we are leveraging is that of quantum dots.

Quantum dots are small islands of low-bandgap semiconductor material, surrounded by a higher-bandgap semiconductor. Because of the difference in bandgap, some electronic states can only exist inside the low-bandgap material. The confinement of electronic states to a few nanometres creates discrete quantum states in a way that is very similar to the classic particle-in-a-box model in quantum mechanics.

This arrangement gives us access to bound electronic states very much like an atom does. That is why quantum dots are sometimes referred to as artificial atoms in the solid state.
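The particle-in-a-box comparison can be made quantitative for a rough sense of scale: in a one-dimensional infinite well of width L, the levels are E_n = n²h²/(8m*L²). The sketch assumes an electron effective mass m* ≈ 0.067 mₑ (a textbook value for GaAs) and a hypothetical 5 nm box. Real dots have finite barriers and three-dimensional shapes, so this overestimates the confinement energies and is only an order-of-magnitude illustration.

```python
H = 6.62607015e-34        # Planck constant (J s)
M_E = 9.1093837015e-31    # electron mass (kg)
EV = 1.602176634e-19      # joules per electronvolt

def box_level_ev(n, length_m, m_eff=0.067):
    """Energy (eV) of level n in a 1D infinite well of width length_m,
    for a particle with effective mass m_eff * M_E."""
    return n ** 2 * H ** 2 / (8 * m_eff * M_E * length_m ** 2) / EV

L = 5e-9                   # hypothetical 5 nm dot
levels = [box_level_ev(n, L) for n in (1, 2, 3)]
print(", ".join(f"E{n} = {e * 1e3:.0f} meV" for n, e in zip((1, 2, 3), levels)))
```

The level spacing grows as 1/L², which is why shrinking the island to a few nanometres turns the continuous bands into discrete, atom-like levels.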

We can then use the same procedure described above to generate single photons from these states. In this case, we use a pulsed laser to promote one electron from the highest occupied state in the valence band of the dot to the lowest unoccupied state in its conduction band.

Unlike in the hydrogen atom, the vacancy left behind in the valence band plays an important role. This ‘hole’ acts as a quasiparticle in its own right, and the effect of the laser pulse is to create a bound state of an electron and a hole, called an exciton (another quasiparticle). The exciton doesn’t live forever: the vacuum-field fluctuations cause the electron to recombine with the hole, and in doing so a single photon is created.

Suppressing vibrations

The electronic levels are not completely isolated from the solid-state environment they are in. The most important source of noise is temperature: the jiggling of the atoms in the semiconductor.

Temperature affects the quantum dots in a couple of ways. First, the presence of vibrations in the crystal’s lattice adds an uncertainty in the energy difference between the electronic levels (technically, they induce decoherence of the energy levels). This has a detrimental effect on the indistinguishability of the emitted photons which will also have this added uncertainty in their frequencies.

The second effect is that the emitter can emit (or absorb) vibrations of the surrounding crystal during the photon emission process. Because the vibrations carry energy, the emitted photons will again have different frequencies from one emission event to the next, reducing indistinguishability.

The solution to this is to cool the sample to cryogenic temperatures to get rid of the vibrations inside the material. For quantum dot emitters, cooling the samples to between 4 K and 8 K is sufficient to suppress the thermal noise. This is the realm of closed-cycle cryostats, which are much less demanding than the more complicated dilution refrigerators.
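The benefit of cooling can be estimated from the Bose-Einstein occupation n = 1/(exp(ħΩ/k_BT) − 1) of a lattice vibration of energy ħΩ. The phonon energies below are assumptions: 1 meV as a representative low-energy acoustic phonon, and about 36 meV for the longitudinal-optical phonon of GaAs. Cooling removes thermally excited vibrations, although the emitter can still spontaneously emit phonons at low temperature.

```python
import math

K_B = 8.617333262e-5   # Boltzmann constant (eV/K)

def phonon_occupation(energy_ev, temperature_k):
    """Mean thermal occupation of a vibrational mode (Bose-Einstein)."""
    return 1.0 / math.expm1(energy_ev / (K_B * temperature_k))

for e_mev in (1.0, 36.0):              # assumed phonon energies
    for t in (300.0, 4.0):
        n = phonon_occupation(e_mev * 1e-3, t)
        print(f"{e_mev:4.0f} meV phonon at {t:5.0f} K: n = {n:.3g}")
```

At room temperature even the low-energy mode holds tens of phonons on average, while at 4 K it is mostly empty and the optical phonon population is utterly negligible.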

Suppressing the laser

Another important consideration for quantum emitters is how to separate the emitted photons from the laser pulses. If we cannot distinguish between the two, the single-photon output is simply drowned out by the laser noise.

Typically, it’s very hard to completely avoid laser light reaching the output of the single-photon source. A good solution here is to use an additional energy level of our quantum emitter.

The key is to find two optical transitions which are separated in frequency (or addressed by orthogonal polarisations of light) such that we can filter out the laser (using optical filters or polarisers) from the single photons at the output. The excited states must be connected in some way to efficiently transfer the electron to the extra state during the laser excitation.

With dots we have access to two different ways to do that. First, we can use the solid-state environment to our advantage by leveraging the coupling of the emitters to the vibrations of the crystal. By using non-resonant laser pulses, we can force the emission of an acoustic phonon to efficiently prepare an exciton.
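To see why a small detuning is enough for spectral filtering, the sketch converts an energy offset between the laser and the emitted photons into a wavelength offset using λ = hc/E. The 930 nm emission wavelength and the 1 meV detuning are hypothetical, illustrative values. In phonon-assisted schemes the laser sits on the higher-energy side of the transition, so that the excess energy can be released as a phonon.

```python
HC_EV_NM = 1239.84198     # h*c in eV nm

def laser_wavelength_nm(emission_nm, detuning_ev):
    """Wavelength of a laser detuned by detuning_ev above the emission energy."""
    e_photon = HC_EV_NM / emission_nm
    return HC_EV_NM / (e_photon + detuning_ev)

emission = 930.0                                # hypothetical emission wavelength
laser = laser_wavelength_nm(emission, 1e-3)     # hypothetical +1 meV detuning
print(f"laser at {laser:.2f} nm, {emission - laser:.2f} nm below the emission")
```

A sub-nanometre separation like this is within reach of narrowband spectral filters placed at the source output.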

The second option we have with quantum dots is to use two electronic states which interact with orthogonal polarisations of light. This is a particularly good method when combined with elliptical cavities.
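Polarisation filtering can be quantified with Malus's law: a polariser transmits a fraction cos²θ of linearly polarised light at angle θ to its axis. With the polariser crossed to the laser, a residual misalignment δ lets through sin²δ of the laser. The sketch converts this into an extinction in decibels for a few hypothetical misalignments; real polarisers also have a finite intrinsic extinction ratio, which this ignores.

```python
import math

def laser_leakage(misalignment_deg):
    """Fraction of a linearly polarised laser transmitted by a polariser set
    at 90 degrees minus the given misalignment (Malus's law: cos^2)."""
    theta = math.radians(90.0 - misalignment_deg)
    return math.cos(theta) ** 2

for delta in (1.0, 0.1, 0.01):
    leak = laser_leakage(delta)
    print(f"misalignment {delta:5.2f} deg: leakage {leak:.2e} ({-10 * math.log10(leak):.0f} dB)")
```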

Putting everything together

At Quandela we benefit from decades of fundamental research in quantum dot semiconductor technology. This allows us to bring all the elements together to fabricate bright sources of indistinguishable single photons.

Importantly, we use a unique method (developed in the lab of our founders) to deterministically place single quantum dots at the maximum of the vacuum-field fluctuations of micropillar cavities. In this way, we make the most out of the Purcell effect.

In the figure above, we show a 3D rendering of our single-photon sources. As discussed above, they consist of a quantum dot at the anti-node of a long-lived cavity mode which preferentially leaks through the top mirror towards an output fibre.

The ‘cross’ frame around the pillar cavity is there to make electrical connections to the top and bottom of the semiconductor stack. This allows us to control the electrical environment of the dot and tune its emission wavelength via the Stark effect.

With the current generation of devices, we simultaneously achieve an in-fibre brightness of > 30%, a g⁽²⁾ < 0.05 and a HOM visibility > 90% (see part 1). With this state-of-the-art performance, we believe that large-scale quantum photonics applications are within reach.

A quick note on scalability

More complex applications in quantum photonics require multiple photons to arrive simultaneously on a chip. The obvious route here is to have multiple sources firing at the same time to provide single photons in multiple input fibres.

While it is generally more difficult for remote sources to generate identical photons (i.e. with perfect HOM visibility), recent results suggest that the reproducibility of our fabrication process is the key to large-scale fabrication of identical sources.

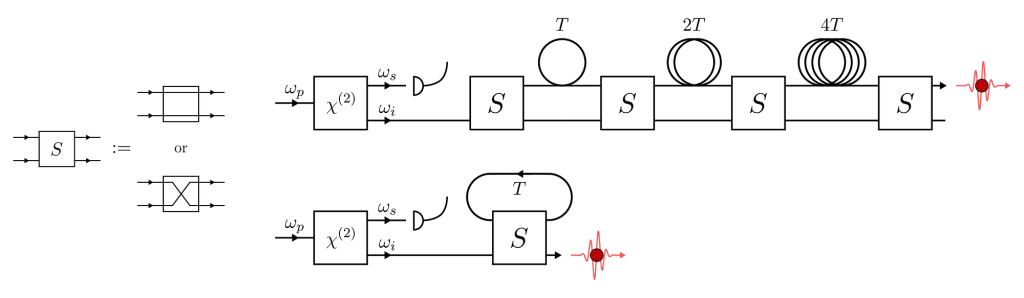

Additionally, we can use active temporal-to-spatial demultiplexing to take advantage of the indistinguishability of the photons coming from the same source. This technique can be thought of as the inverse of the temporal multiplexing that we saw in part 2 for spontaneous pair sources.

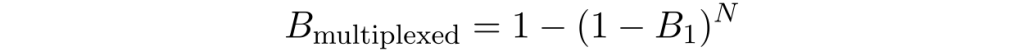

Starting from a train of single photons, we switch and delay some of the photons to get several spatial inputs. With a demultiplexer, we reduce the repetition rate of our source to achieve synchronised inputs. The n-photon coincidence rate is then given by

$$C_n = \frac{R}{n}\,(\mu B \eta)^{n}$$

where (as defined in part 1) μ is the detection efficiency, B is the source efficiency and η is the demultiplexer efficiency. R is the repetition rate of the laser pulses.
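The two steps can be sketched under simplifying assumptions: photon k of the pulse train is routed to output port k mod n and delayed so that each group of n consecutive photons leaves the demultiplexer together, and the n-photon rate then follows C_n = (R/n)(μBη)ⁿ. The function names and example numbers (an 80 MHz repetition rate, μ = 0.9, B = 0.3, η = 0.8) are hypothetical, not measured device figures.

```python
def demux_schedule(n_outputs, n_photons, rep_rate_hz):
    """For each photon k in the pulse train, return (output port, delay in s)
    so that every group of n_outputs consecutive photons exits together."""
    period = 1.0 / rep_rate_hz
    schedule = []
    for k in range(n_photons):
        slot = k % n_outputs                          # position inside its group
        delay = (n_outputs - 1 - slot) * period      # wait for the last photon of the group
        schedule.append((slot, delay))
    return schedule

def coincidence_rate(n, rep_rate_hz, mu, b, eta):
    """n-photon coincidence rate C_n = (R / n) * (mu * B * eta) ** n."""
    return rep_rate_hz / n * (mu * b * eta) ** n

R = 80e6                                            # hypothetical repetition rate (Hz)
sched = demux_schedule(4, 8, R)
arrivals = [k / R + delay for k, (_, delay) in enumerate(sched)]
print(sched[:4])
print(f"4-photon rate: {coincidence_rate(4, R, 0.9, 0.3, 0.8):.0f} Hz")
```

The exponential dependence on μBη is why every percent of source and demultiplexer efficiency matters as n grows.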

Conclusion

In this series, we have reviewed the state-of-the-art technology for producing single photons on demand. We have explored in some detail the underlying principles of spontaneous parametric down-conversion and atom-like quantum emitters and highlighted their differences.

At Quandela, we feel that quantum-dot sources have great potential for the miniaturisation and scalability of optical-qubit generators. We are working hard to improve their performance and to make them accessible to a wider academic and industrial audience.

If you would like to know more about our technology please email contact@quandela.com or visit our website https://quandela.com/ . If you would like to join our mission to build the world's brightest sources of optical qubits, please have a look at our job openings at https://apply.workable.com/quandela/ .