Quantum computing is one of the most significant shifts under way in computational science, and it is likely to change how data scientists tackle complex problems. Recent demonstrations report quantum processors completing certain benchmark tasks in minutes that classical supercomputers are estimated to need thousands of years to finish [-1].

Quantum computing draws on the core principles of quantum mechanics to process information differently from classical computers. Traditional bits are always either 0 or 1, but quantum bits (qubits) can exist in a superposition of both states. Applications now span many fields, from cryptography to drug discovery, and quantum machine learning has shown promising early results. Quantum algorithms could speed up complex model training and tackle optimization problems that are intractable for classical methods [-2].

Data scientists now find themselves at a vital intersection where expertise in both domains adds increasing value. This piece explores the fundamental concepts, practical applications, and implementation strategies you need to add quantum computing to your data science toolkit.

Understanding the Basics of Quantum Computing

The science behind quantum computing works quite differently from traditional computing systems. Classical computers work with binary operations, while quantum computers exploit the counterintuitive behavior of matter and light at the quantum scale to perform calculations that binary logic alone cannot express efficiently.

What is quantum computing?

Quantum computing uses quantum mechanical phenomena to process data. Unlike classical computers that use electrical signals for bits (0s and 1s), quantum computers work with quantum bits or qubits. These qubits can exist in multiple states at once. This technology marks a big shift from classical computing methods because it processes information through quantum states instead of simple binary logic.

A quantum computer has a collection of qubits that work together to run quantum algorithms. These algorithms use quantum properties to solve certain problems faster than the best known classical methods. Today’s quantum computers are mostly experimental devices. Many need tightly controlled conditions, and some architectures operate at temperatures close to absolute zero, to maintain quantum coherence.

How does quantum computing work?

Quantum computers work by manipulating qubits through quantum gates and circuits. The main principles behind this are:

Superposition: A classical bit must be either 0 or 1. A qubit can be in a weighted combination of both, described by two complex amplitudes. This lets a quantum algorithm act on many possibilities within a single state, although a measurement returns only one outcome.

Quantum Gates: These are the quantum analogue of classical logic gates. Each gate applies a unitary, and therefore reversible, transformation to the qubit state, so no quantum information is lost.

Measurement: Qubits collapse into classical states (0 or 1) when measured. The pre-measurement quantum state determines the probability of each outcome.

A typical quantum computation follows these steps:

- Qubits start in a known state

- Quantum gates transform these states

- Measurements give classical output
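The three steps above can be sketched with a tiny state-vector simulation. This is a minimal illustration in plain Python, not how a real quantum SDK or device works internally; the helper names (`apply_gate`, `probabilities`, `measure`) are made up for this example, and the Hadamard gate is chosen simply because it turns the known state |0> into an equal superposition.

```python
import math
import random

def apply_gate(gate, state):
    """Multiply a 2x2 gate matrix by a 2-element state vector."""
    return [
        gate[0][0] * state[0] + gate[0][1] * state[1],
        gate[1][0] * state[0] + gate[1][1] * state[1],
    ]

def probabilities(state):
    """Born rule: the probability of each outcome is |amplitude|^2."""
    return [abs(a) ** 2 for a in state]

def measure(state, shots, seed=0):
    """Sample classical outcomes (0 or 1) from the state's probabilities."""
    rng = random.Random(seed)
    p0 = probabilities(state)[0]
    counts = {0: 0, 1: 0}
    for _ in range(shots):
        counts[0 if rng.random() < p0 else 1] += 1
    return counts

# Step 1: start in the known state |0>, written as amplitudes [1, 0].
ket0 = [1 + 0j, 0 + 0j]

# Step 2: apply a Hadamard gate, which creates an equal superposition.
s = 1 / math.sqrt(2)
HADAMARD = [[s, s], [s, -s]]
superposed = apply_gate(HADAMARD, ket0)

# Step 3: measurement yields classical bits with Born-rule probabilities.
probs = probabilities(superposed)
counts = measure(superposed, shots=1000)
print(probs)
print(counts)
```

Each run of `measure` returns only 0s and 1s; the superposition shows up in the statistics over many shots, not in any single outcome.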

Several quantum computing architectures exist today. These include superconducting circuits, trapped ions, and photonic systems. Each type comes with its own benefits and challenges. Photonic quantum computing shows great promise for specific tasks, especially in image recognition.

Key differences from classical computing

Quantum and classical computing differ in both theory and practical performance:

| Aspect | Classical Computing | Quantum Computing |
| --- | --- | --- |
| Basic unit | Bit (0 or 1) | Qubit (superposition of states) |
| Processing | Sequential operations | Parallel quantum operations |
| Scaling | Linear increase in power with resources | Potentially exponential increase |
| Parameter count | Often requires millions of parameters | Can achieve comparable results with fewer parameters |

Tests show remarkable efficiency gaps. A quantum CNN reached 89% accuracy using just 926 parameters. A classical CNN needed 3,681 parameters to hit 93% accuracy on the same task. Some quantum models achieved 95.5% accuracy with only 3,292 parameters, while classical models needed 6,690 parameters to reach 96.89% accuracy.
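To put those figures side by side, the short script below computes the parameter ratio and the accuracy gap for the two comparisons quoted above. It uses only the numbers stated in this article; the function name `summarize` and the row labels are illustrative.

```python
# (label, quantum accuracy %, quantum params, classical accuracy %, classical params)
comparisons = [
    ("QCNN vs CNN", 89.0, 926, 93.0, 3681),
    ("Quantum model vs classical model", 95.5, 3292, 96.89, 6690),
]

def summarize(q_acc, q_params, c_acc, c_params):
    """Return the parameter ratio and the accuracy gap in percentage points."""
    return {
        "param_ratio": q_params / c_params,
        "accuracy_gap_pts": c_acc - q_acc,
    }

for label, q_acc, q_params, c_acc, c_params in comparisons:
    s = summarize(q_acc, q_params, c_acc, c_params)
    print(f"{label}: {s['param_ratio']:.1%} of the parameters, "
          f"{s['accuracy_gap_pts']:.2f} points lower accuracy")
```

In both cases the quantum model uses roughly a quarter to a half of the parameters while giving up a few points of accuracy, which is the trade-off to keep in mind when reading the efficiency claims.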

Quantum computing brings new approaches to machine learning problems. Quantum transfer learning helps adapt pre-trained models to new tasks. These methods can reach accuracy rates above 97% and need fewer computing resources than classical approaches.

Yet quantum computing faces big challenges. These include error rates, decoherence issues, and complex programming needs. Most practical applications now use hybrid quantum-classical approaches that combine the best of both worlds.

Why Data Scientists Should Care About Quantum AI

Data science is moving faster than ever, and quantum artificial intelligence (quantum AI) stands as a revolutionary force that promises to break through the limitations of classical machine learning. A new paradigm emerges as quantum computing joins with AI, and data scientists can’t ignore its potential.

Limitations of classical machine learning

Traditional machine learning has made great strides, but it faces real constraints. Classical models become less computationally efficient as problems grow more complex, and classical CNNs tend to use many more parameters than their quantum counterparts. In image recognition, a classical CNN needed 6,690 parameters to reach 96.89% accuracy, while a quantum-enhanced system reached 95.5% accuracy with just 3,292 parameters.

These problems get harder as datasets grow larger and more complex. Classical algorithms run into computational limits that even the most powerful conventional hardware cannot overcome. This is especially true for optimization tasks with many variables and for simulations whose cost grows exponentially.

Opportunities in quantum machine learning

Quantum machine learning offers several potential advantages over classical methods. Parameter efficiency leads the way: quantum models can deliver comparable results with fewer trainable parameters. For example, a quantum CNN achieved 89% accuracy on a 12×12 image classification task using only 926 parameters, while the classical version needed 3,681 parameters to reach 93% accuracy.

Quantum approaches shine at transfer learning. Tests show that quantum transfer learning techniques hit accuracy rates above 97% when moving knowledge between datasets. These results beat classical methods, especially with smaller datasets or limited computing resources.

Quantum kernels show great promise in improving model performance. Some applications saw quantum-enhanced models perform 10% better than classical ones. This suggests quantum approaches might find patterns that regular algorithms miss completely.
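One common way to define a quantum kernel is as the squared overlap between two encoded quantum states. The sketch below simulates that idea for a single qubit in plain Python. The angle-encoding feature map (a rotation RY(x) applied to |0>) is an assumption picked for simplicity, not the feature map used in the experiments cited here, and the function names are illustrative.

```python
import math

def feature_state(x):
    """Angle-encode a scalar x as RY(x)|0> = [cos(x/2), sin(x/2)]."""
    return [math.cos(x / 2), math.sin(x / 2)]

def quantum_kernel(x, y):
    """Fidelity kernel: squared overlap |<phi(x)|phi(y)>|^2 of the two states."""
    a, b = feature_state(x), feature_state(y)
    overlap = a[0] * b[0] + a[1] * b[1]
    return overlap ** 2

def gram_matrix(xs):
    """Kernel matrix that a classical method (e.g. an SVM) could consume."""
    return [[quantum_kernel(xi, xj) for xj in xs] for xi in xs]

samples = [0.0, math.pi / 2, math.pi]
for row in gram_matrix(samples):
    print([round(v, 3) for v in row])
```

The resulting matrix is symmetric with ones on the diagonal, so it can be handed to any classical kernel method; on hardware, each entry would be estimated from repeated measurements rather than computed exactly.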

Industries already exploring quantum AI

Many sectors are finding practical uses for quantum AI:

| Industry | Quantum AI Application | Observed Advantage |
| --- | --- | --- |
| Computer Vision | Image classification with quantum CNNs | Better performance on smaller datasets (58% vs 40% for 4×4 images) |
| Financial Services | Optimization problems | Enhanced pattern recognition in complex datasets |
| Healthcare | Drug discovery | Improved molecular simulations |
| Manufacturing | Supply chain optimization | More efficient resource allocation |

Systems like Quantum-Train (QT) show how quantum processing and neural networks can work together. Photonic implementations look particularly promising in test environments.

Data scientists who want to stay at the vanguard of their field need to understand quantum AI’s principles and applications. These technologies are moving from theory into practical tools that offer real benefits.

Building Hybrid Quantum-Classical Models

Extracted image from Notton et al., 2015, “Establishing Baselines for Photonic Quantum Machine Learning:…”

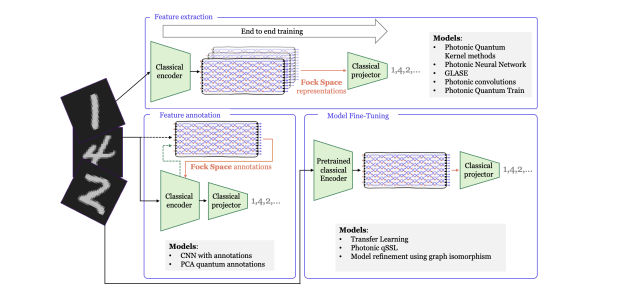

Modern quantum computing applications combine classical and quantum approaches to create practical solutions that use both computing methods. Hybrid quantum-classical models show this combination at work. Data scientists can now access quantum advantages without needing fully developed quantum hardware.

What is a hybrid model?

A hybrid quantum-classical model blends traditional computing systems with quantum circuits to boost performance and handle quantum hardware limits. These systems split tasks between classical and quantum processors based on what each does best. Classical parts take care of data prep, parameter control, and results processing. Quantum components run specialized operations that use quantum mechanical properties.
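The division of labour described above can be sketched as a three-stage pipeline. In this toy version the quantum stage is simulated classically: a single qubit is rotated by RY and the expectation value of Z is returned, which equals the cosine of the rotation angle. All function names, the feature range, and the threshold rule are assumptions made for illustration, not part of any particular framework.

```python
import math

def classical_preprocess(raw, lo, hi):
    """Classical step: rescale a raw feature into a rotation angle in [0, pi]."""
    return math.pi * (raw - lo) / (hi - lo)

def quantum_layer(angle, weight):
    """Quantum step (simulated): apply RY(angle + weight) to |0> and
    return the expectation value <Z> = cos(angle + weight)."""
    theta = angle + weight
    amp0, amp1 = math.cos(theta / 2), math.sin(theta / 2)
    return amp0 ** 2 - amp1 ** 2

def classical_postprocess(expectation):
    """Classical step: turn <Z> in [-1, 1] into a class label."""
    return 0 if expectation >= 0 else 1

def hybrid_predict(raw, weight, lo=0.0, hi=10.0):
    """Chain the classical and (simulated) quantum stages."""
    angle = classical_preprocess(raw, lo, hi)
    return classical_postprocess(quantum_layer(angle, weight))

predictions = [hybrid_predict(x, weight=0.0) for x in (1.0, 4.0, 6.0, 9.0)]
print(predictions)
```

In a real hybrid model `weight` would be trained by a classical optimizer, and `quantum_layer` would be replaced by a call to a simulator or quantum processor.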

How quantum circuits integrate with neural networks

Quantum circuits and neural networks come together in several ways:

Parametrized Quantum Kernels (PQK) work like quantum versions of classical neural network layers. Tests show single-layer PQK models hit 92.58% accuracy on image classification tasks. They do this with far fewer parameters than classical models.

Quantum Convolutional Neural Networks (QCNN) create quantum versions of convolution operations. QCNNs reached 89% accuracy on 12×12 image classification tasks with just 926 parameters, while classical CNNs needed 3,681 parameters to reach 93%.

Quantum-Train (QT) architecture uses quantum circuits to generate parameters for neural networks. QT variants with D=10 dimensions reached 95.50% accuracy using 3,292 parameters. Classical models need twice as many parameters to match this performance.

Popular frameworks and tools for quantum ML

Here are the leading quantum machine learning platforms:

| Framework | Specialization | Implementation Example |
| --- | --- | --- |
| MerLin | Hybrid model training | QCNN implementation |
| Qiskit | IBM quantum systems integration | Transfer learning experiments |
| Quantum Tensor Networks | Dimensionality reduction | Hybrid quantum-classical algorithms |
| Perceval | Photonic quantum computing | Demonstrating 96.50% accuracy |

Challenges in training hybrid models

Training hybrid models comes with unique challenges. Parameter optimization needs special approaches like Simultaneous Perturbation Stochastic Approximation (SPSA). This happens because gradient computation works differently from classical systems. Quantum decoherence limits how deep and complex circuits can be, so architects must design carefully.
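SPSA is attractive here because it estimates the gradient for all parameters from just two evaluations of the loss per iteration, however many parameters there are. The following is a simplified sketch in plain Python, assuming a toy two-parameter loss that mimics a two-qubit circuit; the gain constants and the `loss` function are illustrative choices, not values taken from the experiments discussed in this article.

```python
import math
import random

def loss(theta):
    """Toy cost: 1 - <ZZ> for two qubits prepared by RY(theta[0]) and
    RY(theta[1]), where <ZZ> = cos(theta[0]) * cos(theta[1])."""
    return 1.0 - math.cos(theta[0]) * math.cos(theta[1])

def spsa_minimize(loss_fn, theta, iterations=200, a=0.2, c=0.1,
                  alpha=0.602, gamma=0.101, seed=0):
    """Minimize loss_fn with a basic SPSA loop (no stability constant)."""
    rng = random.Random(seed)
    theta = list(theta)
    for k in range(iterations):
        a_k = a / (k + 1) ** alpha      # decaying step size
        c_k = c / (k + 1) ** gamma      # decaying perturbation size
        # Perturb every parameter at once with a random +/-1 direction.
        delta = [rng.choice((-1.0, 1.0)) for _ in theta]
        plus = [t + c_k * d for t, d in zip(theta, delta)]
        minus = [t - c_k * d for t, d in zip(theta, delta)]
        # Two loss evaluations give a gradient estimate for all parameters.
        diff = loss_fn(plus) - loss_fn(minus)
        grad = [diff / (2.0 * c_k * d) for d in delta]
        theta = [t - a_k * g for t, g in zip(theta, grad)]
    return theta

start = [1.0, -0.8]
best = spsa_minimize(loss, start)
print(f"loss before: {loss(start):.4f}, after: {loss(best):.4f}")
```

On hardware, each call to `loss_fn` would be a batch of circuit executions, so keeping the number of evaluations per step fixed at two is the main practical benefit.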

Balancing resources between classical and quantum parts is also vital. Models with too many quantum components run slowly. Those with too few quantum elements don’t offer advantages over classical approaches.

Tests with QCNNs on 4×4 images showed 58% accuracy compared to 40% for classical CNNs. This advantage disappeared with larger 12×12 images, where classical CNNs performed better (93% versus 89% for QCNNs). Finding the right balance between classical and quantum elements remains a central challenge in developing effective hybrid models.

Real-World Applications and Use Cases

Real-world applications of quantum AI now show measurable advantages in many fields. We’re moving past theory into practical uses that deliver real benefits.

Quantum-enhanced image recognition

Tests with Quantum Convolutional Neural Networks (QCNNs) show impressive gains in image classification. QCNNs achieved 58% accuracy for small 4×4 images compared to classical CNNs at 40%. They did this while using fewer parameters (126 versus 165). Classical CNNs still held a slight edge with larger 12×12 images at 93% versus 89% for QCNNs. However, the quantum approach needed far fewer parameters (926 versus 3,681).

The results get even better with Quantum-Train (QT) architectures. With dimension D=4, they reached 93.29% accuracy using only 688 parameters. This approaches the classical models’ 96.89% accuracy, which required 6,690 parameters. Quantum models deliver comparable results with a fraction of the parameters.

Optimization problems in logistics

Supply chain optimization is a natural candidate for quantum computing because it is combinatorial: the number of possible routes, schedules, and allocations grows rapidly with problem size. Quantum algorithms use superposition to represent many candidate solutions at once and may find better routes, schedules, and resource plans than classical heuristics. Such improvements would translate directly into lower costs and better efficiency.

Financial modeling with quantum AI

The finance industry stands to benefit from quantum computing’s ability to model complex market behaviors and assess risks. Quantum Monte Carlo methods are a promising route to pricing options and optimizing portfolios. Financial institutions can use these capabilities to study correlation patterns across assets. As a result, they may make better-informed investment choices and manage risk more effectively than traditional methods allow.

Drug discovery and molecular simulations

Quantum computing shows great promise in pharmaceutical research by modeling molecular interactions at new scales. Traditional methods need massive computing power to model protein folding or drug-molecule interactions accurately. Quantum approaches could cut discovery times from years to months. They map molecular behavior to quantum states efficiently and speed up the search for promising compounds to treat various diseases.

Getting Started: A Practical Guide for Data Scientists

Your quantum machine learning journey starts with picking the right platform and designing experiments. Implementing quantum AI concepts requires familiarity with a few specialized tools and methods.

Choosing the right quantum platform

Several quantum computing frameworks offer distinct advantages depending on your needs. MerLin is geared toward hybrid quantum-classical model development, while Qiskit integrates with IBM quantum systems. Perceval supports photonic quantum computing implementations and showed 96.5% accuracy on certain tasks. Quantum Tensor Networks offer specialized capabilities for dimensionality reduction. Pick the platform that matches your hardware access and application requirements.

Setting up your first quantum ML experiment

Complex problems come later – start simple. The first step is to reduce high-dimensional classical datasets through techniques like PCA before quantum processing. Your image classification tasks should begin with smaller images (4×4 or 12×12), as quantum advantages work better with reduced dimensionality. Hybrid approaches like Quantum-Train (QT) are a great way to get results by combining parametrized quantum circuits with neural networks, reaching 93.29% accuracy with only 688 parameters.
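As a rough illustration of shrinking inputs before quantum processing, the snippet below downsamples a square image to 4×4 by average pooling. Note that this is a simpler stand-in for dimensionality reduction, not PCA itself; the 8×8 input image and the function name `average_pool` are hypothetical and chosen only to keep the example self-contained.

```python
def average_pool(image, out_size):
    """Downsample a square image (list of rows) to out_size x out_size
    by averaging non-overlapping blocks."""
    n = len(image)
    if n % out_size != 0:
        raise ValueError("image side must be a multiple of out_size")
    block = n // out_size
    pooled = []
    for r in range(out_size):
        row = []
        for c in range(out_size):
            total = sum(
                image[r * block + i][c * block + j]
                for i in range(block)
                for j in range(block)
            )
            row.append(total / (block * block))
        pooled.append(row)
    return pooled

# Hypothetical 8x8 grayscale image: left half dark (0.0), right half bright (1.0).
image = [[0.0] * 4 + [1.0] * 4 for _ in range(8)]
small = average_pool(image, 4)
for row in small:
    print(row)
```

The 16 values of the pooled image are small enough to feed into a simulated circuit, which makes it a convenient starting point before moving to PCA or larger inputs.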

Tips for working with quantum datasets

Raw data cannot enter a quantum system directly, so preprocessing is crucial. Dimensionality reduction keeps the essential information while making datasets small enough for quantum hardware. The reduced data is then converted to quantum states through amplitude encoding or feature maps. For photonic circuits, the number of modes also matters: use 10 for simple classification and 24 for complex tasks.
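Amplitude encoding stores a feature vector in the amplitudes of a quantum state, so the vector must have a power-of-two length and unit norm. The helper below shows only that classical preparation step, assuming zero-padding as the padding rule; the function name is illustrative, and actually loading the amplitudes onto qubits would be handled by whichever framework you use.

```python
import math

def amplitude_encode(features):
    """Pad to the next power of two and L2-normalize so the values
    can serve as the amplitudes of an n-qubit state."""
    if not any(features):
        raise ValueError("cannot encode an all-zero vector")
    # Smallest n with 2**n >= len(features), and at least one qubit.
    n_qubits = max(1, (len(features) - 1).bit_length())
    padded = list(features) + [0.0] * (2 ** n_qubits - len(features))
    norm = math.sqrt(sum(x * x for x in padded))
    return [x / norm for x in padded], n_qubits

amplitudes, n_qubits = amplitude_encode([3.0, 0.0, 4.0])
print(n_qubits, amplitudes)
```

Because n qubits hold 2^n amplitudes, a flattened 4×4 image (16 values) fits in 4 qubits, which is one reason small inputs are a practical starting point.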

Best practices for debugging quantum models

Track both classical and quantum accuracy during development, and test often on small subsets before scaling up. Your quantum model should land close to classical performance (92-98% versus 95-99% for classical models in transfer learning scenarios); a much larger gap is worth investigating.

Resources to learn quantum programming

Recent publications in Physical Review A, Optica Quantum, and specialized quantum computing journals are essential reading. Pair that theory with hands-on experimentation. Quantum simulators are a good starting point before you move to actual quantum processing units (QPUs).

Conclusion

Quantum computing is beginning to reshape data science practice. Our exploration shows how quantum principles like superposition and quantum gates open up new ways to process information. Parameter efficiency is the standout result: quantum models need fewer parameters than traditional systems. Quantum CNNs illustrate this by coming within a few points of classical accuracy with far fewer parameters.

Right now, the best way forward combines quantum circuits with neural networks through hybrid approaches. These systems blend the reliability of classical computing with quantum advantages. Data scientists need to master both systems instead of seeing them as rivals.

Quantum AI applications show real benefits in image recognition, financial modeling, logistics optimization, and drug discovery. Even so, hurdles remain. Teams need to account for quantum decoherence, the division of work between classical and quantum parts, and specialized parameter optimization techniques to develop effective solutions.

Data scientists who want to add quantum computing to their skillset should start with simple problems. They should use smaller datasets where quantum advantages shine. Tools like MerLin, PennyLane, Qiskit, and Perceval are available to help you experiment.

What started as a theoretical promise is now becoming reality. Though still new, quantum AI shows notable parameter-efficiency gains compared to classical methods. Data scientists who build expertise now will have an edge as quantum technologies mature and blend into mainstream computing workflows. Success awaits professionals who can connect classical and quantum systems to create solutions that draw on the best of both worlds.

Key Takeaways:

Quantum computing is revolutionizing data science by offering unprecedented computational advantages through quantum mechanics principles like superposition and quantum gates.

- Quantum models achieve superior parameter efficiency – QCNNs reach 89% accuracy with only 926 parameters while classical CNNs need 3,681 parameters for 93% accuracy

- Hybrid quantum-classical approaches offer the most practical implementation path – combining classical reliability with quantum advantages for real-world applications

- Start small with dimensionally reduced datasets – quantum advantages are most pronounced on smaller problems like 4×4 images where QCNNs outperform classical models

- Multiple industries are already benefiting – from image recognition and financial modeling to drug discovery and logistics optimization

- Use established frameworks like MerLin, PennyLane, Qiskit, and Perceval – these platforms provide accessible entry points for experimenting with quantum machine learning

The convergence of quantum computing and AI represents a paradigm shift that data scientists cannot ignore. While challenges like quantum decoherence and specialized optimization exist, the demonstrated efficiency gains make quantum AI a crucial skill for future-ready professionals.

The full paper is now available on arXiv!